Causes and Reasons: The Mechanics of Anticipation

C. George Boeree

The concept of anticipation shows considerable potential for unifying the causal and teleological aspects of psychology. As a step towards exploring that potential, this paper presents a network model of cognition based on stratificational linguistics theory, including "feedforward" features that provide a likely mechanism for anticipation. Speculations on how the model might lead to a broader understanding of the mind's structure are included.

The idea of anticipation has created quite a stir in the psychological community over the last few decades. It is a long overdue recognition that human behavior is predominantly purposive.

In fact, of course, the idea has been around for a long time, in Husserl's phenomenology, Adler's individual psychology, and Tolman's cognitive behaviorism, for example. We can go back to Herbart's idea of apperception, if we like, or all the way to Aristotle's entelechy.

The appeal of the idea of anticipation is clear: It corresponds well with our introspective experience; it makes possible a degree of individual autonomy or "free will"; it makes empathy and similar concepts more viable; it animates social role-playing; it brings morality back within the scope of psychological investigation; and so on.

What has prevented us from more quickly embracing the idea of anticipation is that it conflicts with at least our simpler notions of cause and effect. Inasmuch as we have endeavored to make psychology a respectable science, we have emphasized simple causality and ignored anticipation and teleological notions generally.

I am sure most psychologists using the idea of anticipation have a sense that there is nothing intrinsically incompatible about anticipation and causality. The purpose of this paper is to make the connection explicit, that is, to suggest a mechanics of anticipation.

A Framework

My understanding of the interaction between the mind and the world is essentially George Kelly's, and is represented by Figure 1.

In the context of ongoing events, we use our knowledge of and previous experience with similar events to generate anticipations of how those events will unfold. These anticipations are continually compared with our sensations. Many anticipations are of a sort that manifest themselves as actions.

When anticipations fail to predict sensations, or produce ineffective actions, or conflict among themselves, our understanding of the events before us is clearly deficient. We generate more anticipations, attempt other actions, and attend to our sensations, in an effort to return to relatively problem-free interaction. When we succeed, some portion of the steps taken is "stamped into" our understanding. This process we might call adaptation.

These processes are intrinsically affectful. When interaction is problematic, we feel distress. When the problem is resolved, whether through adaptation or avoidance, we feel delight. Details of extent, duration, context, and so on, provide us with the variety of emotions we are familiar with. This approach to affect is common to Kelly (1955), Tomkins (1962, 1963), and Festinger (1957), and can be traced back at least to Spinoza (1930).
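The comparison loop and its affective consequences can be sketched in a few lines of Python. This is a toy under my own assumptions, not Kelly's or Tomkins's formalism: anticipations and sensations are reduced to labels, a mismatch counts as a problem (distress), and the first match after a problem counts as its resolution (delight). Avoidance, duration, intensity, and context are left out.

```python
from typing import List


def affect_trace(anticipated: List[str], sensed: List[str]) -> List[str]:
    """Label each moment 'calm', 'distress', or 'delight'.

    Toy assumption: a moment is problematic when what was sensed differs
    from what was anticipated; the first successful anticipation after a
    problem is treated as its resolution.
    """
    trace: List[str] = []
    in_problem = False
    for expected, actual in zip(anticipated, sensed):
        if expected != actual:
            # Anticipation failed: interaction has become problematic.
            in_problem = True
            trace.append("distress")
        elif in_problem:
            # Anticipation works again right after a problem: resolution.
            in_problem = False
            trace.append("delight")
        else:
            # Problem-free interaction.
            trace.append("calm")
    return trace


print(affect_trace(["a", "b", "c", "d", "e"], ["a", "x", "y", "d", "e"]))
# ['calm', 'distress', 'distress', 'delight', 'calm']
```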

Networks

The linguist Hjelmslev (1961) once suggested that the mind contains nothing: No thing, that is, but relationships. This finds support in both the physical structure of the nervous system and the phenomenal processes of contrast and association.

It suggests further that we might model the mind's structures with more explicit structures, whether they be mathematical models, verbal ones, diagrams, computer programs, or whatever. It is, in fact, a common assumption -- not yet proven -- that any network structure can model any other network structure. One such model was invented by Sidney Lamb (1966) for describing linguistic relationships as a part of his theory of stratificational linguistics.

Language, according to Lamb, is a set of relationships that tie together two interactions: the general one of mind and world, and a particular subset of that interaction, involving the production and perception of certain sounds and marks. Since the world of general interaction is so rich and the phonetic one relatively poor, and since the first is multidimensional (to say the least) and the second linear, there must be rule systems that govern the translation of one to the other.

One might imagine these rule systems as layered one on top of the other, and at right angles to the set of connections between general interaction and phonetic interaction, as in Figure 2. Lamb suggests six layers of rules: hypersememic, sememic, lexemic, morphemic, phonemic, and hypophonemic. A sympathetic linguist, Geoffrey Leech (1974), suggests the traditional semantic, syntactic, and phonetic, but each with a surface and deep aspect.

Lamb, noting that linguistic signals travel both "up" and "down" the network -- representing interpretation and generation respectively -- suggested that the network permit signals to travel in both directions along any line. This is not an unreasonable suggestion: Neurons on the surface of the cerebral and cerebellar cortices are connected so as to permit signals to travel across those surfaces in any direction. Further, from an information-processing point of view, bidirectionality makes feedback and -- as we shall see -- feedforward intrinsic features of the model.

Nodes

The network model consists of lines joined by logical nodes of various kinds. The descriptions of nodes that follow are variations on Lamb's, and are illustrated in Figure 3.

The first three nodes are the most basic: AND, OR, and ANDOR.

Other possibilities result in no output. You will notice that the OR acts like the AND when entered from "above" (a). The conditions that decide between b and c must come afterward.

The next two are also simple: TRIANGLE and CIRCLE.

The triangle, known in stratificational linguistics as "the ordered and," allows one thing to be done ("downward" from a), then a second thing, and then a return "up" the network for further processing. The circle is a simple divergence node, for use in situations where the information is to be used in a variety of other areas.
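As a rough illustration, the triangle and circle can be written as ordinary functions. The handler names and the strictly one-way flow of signals are simplifying assumptions of this Python sketch; in the model itself every line can carry signals in both directions.

```python
from typing import Callable, List

Handler = Callable[[str], None]


def triangle(signal: str, first: Handler, second: Handler, up: Handler) -> None:
    """Ordered 'and': send the signal down to `first`, then to `second`,
    and only then pass it back up the network."""
    first(signal)
    second(signal)
    up(signal)


def circle(signal: str, targets: List[Handler]) -> None:
    """Divergence: the same signal is delivered to every target."""
    for target in targets:
        target(signal)


log: List[str] = []


def record(tag: str) -> Handler:
    # Build a hypothetical handler that notes which branch got the signal.
    return lambda signal: log.append(f"{tag}:{signal}")


triangle("b", record("first"), record("second"), record("up"))
circle("b", [record("spelling"), record("sound"), record("meaning")])
print(log)
# ['first:b', 'second:b', 'up:b', 'spelling:b', 'sound:b', 'meaning:b']
```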

The next nodes involve the simple feedback of "message delivered" and simple feedforward: ACT and SET.

The notation "a-on" signifies that a small loop has been activated that acts as if a signal continued to come into a; "a-off" signifies the deactivation of that loop.
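Read as a piece of machinery, the set node behaves much like a latch. A minimal Python sketch follows; the class and method names are hypothetical, and the ACT node's "message delivered" feedback is not modeled.

```python
class SetNode:
    """Sketch of a set node as a simple latch.

    A signal arriving at `a` switches on a small holding loop ("a-on"),
    after which the node behaves as if the signal were still arriving,
    until the loop is switched off ("a-off").
    """

    def __init__(self, name: str) -> None:
        self.name = name
        self.loop_on = False

    def signal_a(self) -> None:
        # a-on: start the holding loop.
        self.loop_on = True

    def release(self) -> None:
        # a-off: deactivate the holding loop.
        self.loop_on = False

    def output(self) -> bool:
        # The node keeps signalling only while its loop is on.
        return self.loop_on


expect_strike = SetNode("looking for a strike")
expect_strike.signal_a()
print(expect_strike.output())  # True: persists after the input has ended
expect_strike.release()
print(expect_strike.output())  # False
```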

These loops are also a part of the final two nodes: DIAMOND and TEE.

The diamond acts like a gate on upward and downward information. As we shall see, it is usually found at the intersection of rule systems and non-rule systems. And the tee (a variation of Lamb's "upward or") keeps track of ordered processes.

It should also be noted that and, or, triangle, circle, and tee nodes may, for convenience, have additional lines. Many other nodes are, of course, possible; I have found these the most useful.

Examples

Three examples will bring the model to life: The first is a small portion of a naive taxonomy of plants (Figure 4).

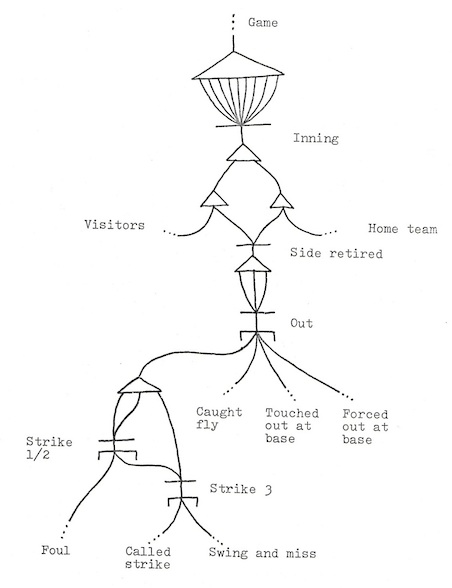

The second, Figure 5, illustrates a portion of the rules of baseball. Note in particular the use of tee nodes to "count" innings, outs, strikes, and so on. Begin at the top and start tracing your way down, and you will find yourself passing through nodes indicating the first inning, first side up, looking for the first out, looking for the first strike, and all the way to the feed-forward expectation of rather specific events such as caught fly, touched out at base, foul, or swing and miss. When one of these expectations is realized, work your way back up the diagram, to looking for a second strike or out, and so on.
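The counting done by the tee nodes can be mimicked with chained counters. This Python sketch is hypothetical in every detail: each tee is treated as a counter over its ordered branches, and balls, walks, fouls with two strikes, and extra innings are ignored.

```python
from typing import Optional


class Tee:
    """Sketch of a tee node as an ordered counter.

    It anticipates its branches in order (first strike, second strike, ...).
    When the last branch is completed it resets and reports completion to
    the tee above it.
    """

    def __init__(self, name: str, branches: int, parent: Optional["Tee"] = None) -> None:
        self.name = name
        self.branches = branches
        self.parent = parent
        self.position = 1  # the branch currently anticipated

    def complete(self) -> None:
        """The anticipated event on the current branch has occurred."""
        if self.position < self.branches:
            self.position += 1
        else:
            self.position = 1
            if self.parent is not None:
                self.parent.complete()


innings = Tee("inning", 9)
sides = Tee("side", 2, parent=innings)
outs = Tee("out", 3, parent=sides)
strikes = Tee("strike", 3, parent=outs)


def out_in_play() -> None:
    # Caught fly or putout at a base: the next batter starts a fresh count.
    strikes.position = 1
    outs.complete()


def anticipation() -> str:
    return (f"inning {innings.position}, side {sides.position}, "
            f"looking for out {outs.position}, strike {strikes.position}")


for _ in range(3):  # three swings and misses: a strikeout
    strikes.complete()
out_in_play()       # a caught fly
print(anticipation())
# inning 1, side 1, looking for out 3, strike 1
```

Working "back up the diagram" corresponds to a completed tee calling `complete()` on its parent.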

Our final illustration (Figure 6) is the most complex. It is a diagram of a small piece of Latin. At the top is a part of a rule system, lexotactics, concerned with the uses of nouns and their cases: Nouns can be nominative, genitive, dative, accusative, or ablative, and in singular or plural form. At the bottom is another rule system, morphotactics, concerned with (among many other things) the specific case endings for specific noun declensions. In between is a tiny portion of the "vertical" network extending from concepts to spoken words, here only between its points of contact with the two rule systems.
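The anticipation of Latin endings can be approximated by combining the two rule systems as lookups. In this Python sketch a flat table stands in for the morphotactic network of Figure 6, only regular first- and second-declension (masculine) endings are listed, and the set of cases permitted by context is simply passed in rather than derived from lexotactics.

```python
from typing import Dict, Set, Tuple

# Morphotactics (simplified): regular endings by declension, case, and number.
ENDINGS: Dict[str, Dict[Tuple[str, str], str]] = {
    "first": {  # e.g. puella, "girl"
        ("nominative", "singular"): "a", ("nominative", "plural"): "ae",
        ("genitive", "singular"): "ae", ("genitive", "plural"): "ārum",
        ("dative", "singular"): "ae", ("dative", "plural"): "īs",
        ("accusative", "singular"): "am", ("accusative", "plural"): "ās",
        ("ablative", "singular"): "ā", ("ablative", "plural"): "īs",
    },
    "second": {  # e.g. dominus, "master"
        ("nominative", "singular"): "us", ("nominative", "plural"): "ī",
        ("genitive", "singular"): "ī", ("genitive", "plural"): "ōrum",
        ("dative", "singular"): "ō", ("dative", "plural"): "īs",
        ("accusative", "singular"): "um", ("accusative", "plural"): "ōs",
        ("ablative", "singular"): "ō", ("ablative", "plural"): "īs",
    },
}


def anticipated_endings(declension: str, cases: Set[str], numbers: Set[str]) -> Set[str]:
    """Endings to expect when context permits the given cases and numbers
    and the noun belongs to the given declension."""
    table = ENDINGS[declension]
    return {ending for (case, number), ending in table.items()
            if case in cases and number in numbers}


# After the preposition "cum" (with), an ablative noun is expected.
print(sorted(anticipated_endings("first", {"ablative"}, {"singular", "plural"})))
# As the direct object of a verb, an accusative noun is expected.
print(sorted(anticipated_endings("second", {"accusative"}, {"singular", "plural"})))
```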

Alternative networks

Before coming to my main point, I should note that other types of nodes are quite possible. For example, we can construct networks with only unidirectional lines and the following three nodes: Fork, And, and Or.

Figure 7 shows these nodes, as well as how the and node and set node of our original model might be constructed with these new nodes.

Or we could construct networks of nodes that are capable of large numbers of inputs and outputs but differ only as to whether they are inhibitory or excitatory -- i.e. something like the nervous system itself.
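A node of this second kind can be sketched as a threshold unit. The Python below assumes that every active input counts as 1, that inhibition simply subtracts, and that the threshold is fixed; real neurons are far messier. It shows how AND-like and OR-like behavior, and a simple veto, fall out of the threshold setting alone.

```python
from typing import Sequence


def threshold_unit(excitatory: Sequence[int], inhibitory: Sequence[int], threshold: int) -> int:
    """Fire (1) if active excitatory inputs minus active inhibitory inputs
    reach the threshold, otherwise stay silent (0). Inputs are 0 or 1."""
    return int(sum(excitatory) - sum(inhibitory) >= threshold)


# Columns: a, b, AND-like, OR-like, a-but-not-b
for a in (0, 1):
    for b in (0, 1):
        print(a, b,
              threshold_unit([a, b], [], threshold=2),  # both inputs needed
              threshold_unit([a, b], [], threshold=1),  # either input suffices
              threshold_unit([a], [b], threshold=1))    # b inhibits a
```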

The Point

It is the "feed-forward" nodes -- set, diamond, and tee -- that bring us back to anticipation. In the examples above, we actually anticipate the next strike or out or inning; or, as we seek to identify a conifer, we look to discover the type of needles or cones it has; or, as we read Latin, we expect certain endings on certain nouns in certain contexts.

I suggest that the set of all such nodes that are "on" at any particular moment constitutes the anticipation of that moment.
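Stated as code, the proposal amounts to a set comprehension over the network's set nodes. The node names below echo the baseball example; the dictionary representation and the helper names are assumptions made for illustration.

```python
from typing import Dict, Set


def current_anticipation(set_nodes: Dict[str, bool]) -> Set[str]:
    """The anticipation of the moment: every set node that is currently on."""
    return {name for name, is_on in set_nodes.items() if is_on}


def was_anticipated(set_nodes: Dict[str, bool], event: str) -> bool:
    """True if the event falls within the current anticipation."""
    return event in current_anticipation(set_nodes)


nodes = {
    "caught fly": True,
    "touched out at base": True,
    "foul": True,
    "swing and miss": True,
    "next inning": False,  # not yet relevant, so its loop is off
}
print(sorted(current_anticipation(nodes)))
# ['caught fly', 'foul', 'swing and miss', 'touched out at base']
print(was_anticipated(nodes, "foul"), was_anticipated(nodes, "rain delay"))
# True False
```

An unanticipated event such as the rain delay is where the problem solving and adaptation described earlier would begin.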

Speculation: A Model of the Mind

The implications of this understanding of anticipation for our understanding of the mind's structure are quite far-reaching. In particular, we can look at the apparent structure of the linguistic portion of the mind, as suggested by Lamb and sympathetic linguists, and generalize.

For example, we have here a model for levels of processing: We can postulate a surface layer of anticipatory nodes that permits the anticipation of very specific events, as when we imagine the face of a loved one. This would be analogous to the lowest level of language processing, the anticipation (and generation) of the sounds of language.

We can also postulate very abstract layers of anticipation, such as expecting the best -- or the worst -- from people. This would be analogous to the highest levels of linguistic processing.

And we can postulate layers of anticipatory nodes between these two, each layer anticipating events in the layer below it. And we may seek to discover the structures -- rule systems and taxonomies -- behind behaviors and experiences in the same way that linguists have discovered the phonetics, syntax, and semantics behind our speaking and understanding language.

Ideas and Images

One small step in this direction is to consider the contrast between ideas and images. Images may be understood as the activity of anticipatory nodes near the "bottom" -- i.e. "sensorimotor" end -- of our mental structure. An image is then the anticipation of a highly specific set of sensations.

Given a "white" sensory field (white light or white noise, for example), image anticipation will select from that field the expected qualities, giving us a "pastel" icon that, in circumstances of minimal or unusual information, could be mistaken for an actual event. Without the white field, the image will remain an anticipation only, a perceptual set.

Note, however, that there are plenty of other "white" backgrounds that make images near-perceptions: Bodily signals such as those coming from muscle tensions or associated with emotions can give one the feeling that something expected is in fact present!

Ideas, on the other hand, reflect the activity of anticipatory nodes higher in the mind's structure, and are the main ingredient of imageless thought. The idea of "horse" may manifest itself at any moment with the image of some particular horse, but need not. Ideas should not be confused with "fuzzy" perceptions.

Sensations and actions

There must also be a layer of processing "beyond" the lowest levels of anticipation -- a sensorimotor layer that prepares the raw material of events for anticipation. Phenomenologically, the world is never truly a "buzzing, blooming confusion," and research with infants (e.g. Bower and Wishart, 1972) suggests that it never was. Likewise, physiological research has long shown processing of information at practically the first instant of neural activity, such as lateral inhibition in the retina.

I would expect the sensory network to resemble the network in Figure 8. With it is an example concerning the perception of the letter b. Notice that the most precise anticipation of the b occurs closest to the stimulus itself: Even at this low level, the most "abstract" occurs at the highest point.

This model clearly resembles Konorski's (1967) model of sensory analyzers. He further suggests that there should be analyzers for each sense with a number of subcategories (e.g. "small manipulable object vision" and "musical melody audition"), inferred from the specificity of lost functions.

There should, of course, be action-synthesizers analogous to sensory-analyzers, and they likely work in close conjunction with sensory-analyzers. Neisser (1976), for example, points out how scanning is as essential to vision as similar movement is to touch.

Constructs

As you can see in Figure 8, individual events can end up lumped together because of a lack of discrimination, forming what we might call a protocategory. The lack of discrimination can be a matter of simple error -- strands of spaghetti are hard to tell apart -- or a matter of being anticipated at a more "abstract" level.

This is a better meaning for "fuzzy" perception: We may be looking for a very precise, one-of-a-kind letter b; or we may be looking for anything vaguely like it, such as a mark on paper. Developmentally, it is curious that we begin by seeing things more abstractly!

These protocategories then become primitive features which allow us to develop "true" categories. We can have the category of birds, for example, because we can have the protocategory of feathers. We become capable, in other words, of having ideas that are more than merely "fuzzy" images.

Now, an event already placed into one category because of some feature or set of features can be examined for the presence or absence of some other feature or set of features. This effectively bifurcates the category and establishes a contrast or differentiation commonly called a construct. Each end of the construct may be used in turn as a category or as a feature of even more complex categories.

So, for example, "humanity," depending on the presence or absence of features such as "maleness," "femaleness," "maturity," or "immaturity" (each one of which is already complex!) is differentiated into male and female, adult and child, man, woman, boy, and girl, etc.

When we start dealing with constructs, we move from an Aristotelian logic, where "not male," for example, encompasses the entire universe other than males, to a more efficient logic, where "not male" is understood as referring to "female," i.e. to the other half of the gender contrast.
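The contrast between the two kinds of negation can be made concrete with sets. In this Python sketch the universe, the feature assignments, and the function names are invented toy data; a construct is represented simply as the pair of poles produced by splitting a category on one feature.

```python
from typing import Dict, FrozenSet, Set, Tuple

# Hypothetical toy data: a tiny universe and the features of each human.
UNIVERSE: Set[str] = {"man", "woman", "boy", "girl", "dog", "rock"}
FEATURES: Dict[str, Set[str]] = {
    "man": {"male", "adult"},
    "woman": {"female", "adult"},
    "boy": {"male"},
    "girl": {"female"},
}
HUMANITY: Set[str] = set(FEATURES)

Construct = Tuple[FrozenSet[str], FrozenSet[str]]


def bifurcate(category: Set[str], feature: str) -> Construct:
    """Split a category by the presence or absence of a feature."""
    present = frozenset(x for x in category if feature in FEATURES[x])
    return present, frozenset(category) - present


def aristotelian_not(pole: FrozenSet[str]) -> Set[str]:
    """Classical negation: everything in the universe outside the pole."""
    return UNIVERSE - pole


def construct_not(pole: FrozenSet[str], construct: Construct) -> FrozenSet[str]:
    """Construct negation: the other pole of the same contrast."""
    left, right = construct
    if pole == left:
        return right
    if pole == right:
        return left
    raise ValueError("pole does not belong to this construct")


gender = bifurcate(HUMANITY, "male")
maturity = bifurcate(HUMANITY, "adult")
male = gender[0]
print(sorted(aristotelian_not(male)))       # ['dog', 'girl', 'rock', 'woman']
print(sorted(construct_not(male, gender)))  # ['girl', 'woman']
```

Note that the construct only answers questions within its own range (here, humanity); asking it for "not male" of a rock is an error rather than a vast complement.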

It must be understood that, despite the linguistic roots of the model, constructs (et al.) are not necessarily tied to words. An animal contrasts dangerous and safe situations, and an infant knows its mother from strangers, yet neither is capable of verbalizing its constructs. It is more than likely that most adult human constructs are equally non-verbal.

Networks Within Networks

There is, of course, no need to assume that the mind, or network model of the mind, needs to be of one piece. We have already made distinctions between pre- and post-anticipatory domains, between rule and non-rule systems, and between linguistic and general interaction. Figure 9 shows (in a highly schematic way) how some of these differentiations fit together, and suggests a few more.

In the lower portion of the diagram, we have the familiar linguistic network, with its layers of rule systems. On the left is the sensorimotor network, some of linguistic concern, most of more general concern. In the center is what we might call the general semantic network, the massive aspect of mind concerned with general interaction.

There are two other networks that suggest themselves. One, the propositional network, is essentially a continuation of the general semantic network, but lies beyond (to the right of) that network's interaction with linguistics. Unlike ideas in the semantic network, which come (from the linguistic network's perspective) with taxonomic definitions, ideas in this network are merely propositional, i.e. contingent rather than necessary. "Cows are animals" is likely drawn from our semantic network; "Cows are brown" would be from the propositional network.

The other is the episodic network, which extends upward from the general semantic network as an extension of the linguistic. Episodic rule systems organize ideas into temporal sequences (episodes). Like literature or theater, our remembrances are often organized into scenes, acts, chapters, even plays or novels.

It is likely that sensations and actions enter into the episodic network as cues in the same way that linguistic cues enter the linguistic network. Natural breaks such as sleep and "scene changes" are likely candidates, as are clock and calendar cues. Most important might be the affect/problem cycle: The movement from calm to distressful problem to delightful resolution back to calm appears again and again in stories, histories, and our day-to-day organization of our own memories.

Unbridled Speculation

The way in which the semantic-propositional networks and the linguistic-episodic networks cross each other is terribly suggestive. Note that animals have little of the linguistic ability that we have, and, we presume, little of the episodic (i.e. personal) memory as well. They do have a general semantic network, of course, although the word semantic seems a bit too linguistic in this context. And that semantic network is completely undifferentiated from the propositional, because there is simply no language to differentiate them. Animals would presumably operate on beliefs as if they were definitions.

And note that, unlike most animals, we have some clear differentiation between the left and right hemispheres of the cerebral cortex. Could the left hemisphere be the realm of the episodic network -- i.e. personal memory -- as well as the linguistic one? Is the right hemisphere the more "animal" one? One cannot help but recall Jaynes' (1977) curious bicameral mind thesis.

The model also suggests that a close examination of the left hemisphere may reveal it to be, paradoxically, "simpler" than the right hemisphere. The linguistic network forces multidimensional cognition into a linear form; it seems only reasonable that the linguistic network's neurological counterpart have a similarly "linearizing" character. It may be a matter of left-hemisphere neurons not connecting in as fully multidimensional a fashion as those in the right hemisphere. Complexity often evolves by means of fortuitous decreases in complexity!

Chomsky suggested a "language acquisition device." I suggest instead a different overall neurological character to at least a portion of the left hemisphere.

Adaptation

Please allow me just a little more "unbridled speculation." As it stands, this is still a rather static model of interaction. We have yet to discuss how the network develops, i.e. adaptation.

We must begin with a network with a vast number of nodes as yet undetermined as to type. Many, perhaps most, are destined to become nothing more than way stations along the lines. Only certain experiences at certain times can cause these "protonodes" to develop into the nodes we've been describing.

Here is a hypothetical description: Anticipation is failing. The existing structures are generating numbers of alternatives, including actions (muscular, glandular, or neurological) which inevitably involve conflict.

At this point, our description becomes easier if we switch into a more traditional physiological mode: This conflict causes the engagement of a system or systems which flood at least portions of the brain with certain neurochemicals, the effects of which are to allow the easier flow of signals across synaptic gaps, including and especially gaps that have had little previous use.

When our anticipatory dilemma is finally resolved, a bounce-back system floods the brain with other neurochemicals which act, like a photographer's fixative, to tighten the synaptic connections which were engaged just prior to the resolution.

One interesting effect this hypothetical process would have is that, in learning relatively complex tasks, learning would be step-like and "backwards": The last behavior of a sequence leading to problem resolution should be the first one learned; the second trial should consist of the same "groping" for solutions as the first, but be more quickly resolved by that last behavior, which in turn reinforces the next-to-last behavior, and so on.
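The predicted step-like, backwards course of learning follows from a very simple rule, sketched here in Python. This is a deterministic caricature of the hypothesis, not a physiological model: the behaviors are placeholders, groping is counted rather than simulated, and each trial is assumed to fix exactly one step.

```python
from typing import List, Tuple


def backward_learning(sequence: List[str]) -> List[Tuple[int, List[str]]]:
    """Trial-by-trial record of (steps groped through, steps fixed so far).

    Assumed rule: on each trial the learner gropes through the unfixed steps
    until it reaches either the resolution or the already-fixed stretch that
    reliably leads to it; the "fixative" then tightens only the step engaged
    just before that point.
    """
    fixed_from = len(sequence)  # start index of the fixed (learned) suffix
    history: List[Tuple[int, List[str]]] = []
    while fixed_from > 0:
        groped = fixed_from   # unfixed steps that still require groping
        fixed_from -= 1       # the step just before resolution is stamped in
        history.append((groped, sequence[fixed_from:]))
    return history


for trial, (groped, fixed) in enumerate(backward_learning(["reach", "grasp", "pull"]), start=1):
    print(f"trial {trial}: groped through {groped} step(s); fixed: {fixed}")
# trial 1: groped through 3 step(s); fixed: ['pull']
# trial 2: groped through 2 step(s); fixed: ['grasp', 'pull']
# trial 3: groped through 1 step(s); fixed: ['reach', 'grasp', 'pull']
```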

Endorphin research offers some support. For example, attention in rats increases with naloxone injection (Segal, Browne, Bloom, Ling, and Guillemin, 1977), and learning is facilitated by a following morphine injection (Stein and Belluzzi, 1978). This is not only compatible with our adaptation hypothesis; it is also compatible with the model of affect presented earlier. And it suggests the delightful possibility that traditional reinforcement is only a supplement to a natural "stamping in" process!

Conclusions

After all this speculation, let me restate the main point of this paper: Anticipation sits at the intersection of teleological psychology and traditional deterministic psychology. In one direction, it forms the basis of purpose, value, morality, freedom, and other concepts that, while difficult to deal with in traditional ways, are the mainstay of much of our thinking regarding personality, development, social interaction, education, and therapy.

Looking in the other direction, however, we can see that anticipation arises from certain simple, quite causal, systems. I hope I have also shown that these systems may be modeled with networks, and that the implications are as interesting as they are various.

References

Bower, T. G. R. and Wishart, J. G. (1972). The effects of motor skills on object permanence. Cognition, 1, 165-172.

Festinger, L. (1957). A theory of cognitive dissonance. Stanford: Stanford University Press.

Hjelmslev, L. (1961). Prolegomena to a theory of language (F. J. Whitfield, Trans.). Madison: University of Wisconsin Press. (Original work published 1943.)

Jaynes, J. (1977). The origin of consciousness in the breakdown of the bicameral mind. Boston: Houghton Mifflin.

Kelly, G. (1955). The psychology of personal constructs (2 vols.). N.Y.: Norton.

Konorski, J. (1967). Integrative activity of the brain. Chicago: University of Chicago Press.

Lamb, S. M. (1966). Outline of stratificational grammar. Washington, D.C.: Georgetown University Press.

Leech, G. N. (1974). Semantics. Baltimore: Penguin.

Lockwood, D. G. (1972). Introduction to stratificational linguistics. N.Y.: Harcourt Brace Jovanovich.

Lockwood, D. G. (1973). The problem of inflexional morphemes. In A. Makkai and D. G. Lockwood (Eds.), Readings in stratificational linguistics. University: University of Alabama Press.

Neisser, U. (1976). Cognition and reality: Principles and implications of cognitive psychology. San Francisco: Freeman.

Spinoza, B. (1930). Selections (J. Wild, Ed. and Trans.). N.Y.: Charles Scribner's Sons.

Tomkins, S. S. (1962, 1963). Affect, imagery, and consciousness (2 vols.). N.Y.: Springer.

Copyright 1998, C. George Boeree. Written 1991. Based on Masters Thesis, Oklahoma State University, 1979.