Behaviorism

Dr. C. George Boeree

Behaviorism is the philosophical position that says that psychology, to be a science, must focus its attentions on what is observable -- the environment and behavior -- rather than what is only available to the individual -- perceptions, thoughts, images, feelings.... The latter are subjective and immune to measurement, and therefore can never lead to an objective science.

The first behaviorists were Russian. The very first was Ivan M. Sechenov (1829 to 1905). He was a physiologist who had studied at the University of Berlin with famous people like Müller, Du Bois-Reymond, and Helmholtz. Devoted to a rigorous blend of associationism and materialism, he concluded that all behavior is caused by stimulation.

In 1863, he wrote Reflexes of the Brain. In this landmark book, he introduced the idea that there are not only excitatory processes in the central nervous system, but inhibitory ones as well.

Vladimir M. Bekhterev (1857 to 1927) is another early Russian behaviorist. He graduated in 1878 from the Military Medical Academy in St. Petersburg, one year before Pavlov arrived there. He received his MD in 1881 at the tender age of 24, then went to study with the likes of Du Bois-Reymond and Wundt in Berlin, and Charcot in France.

He established the first psychology lab in Russia at the University of Kazan in 1885, then returned to the Military Medical Academy in 1893. In 1904, he published a paper entitled "Objective Psychology," which he later expanded into three volumes.

He called his field reflexology, and defined it as the objective study of stimulus-response connections. Only the environment and behavior were to be discussed! And he discovered what he called the association reflex -- what Pavlov would call the conditioned reflex.

Ivan Pavlov

Which brings us to the most famous of the Russian researchers, Ivan Petrovich Pavlov (1849-1936). After studying for the priesthood, as had his father, he switched to medicine in 1870 at the Military Medical Academy in St. Petersburg. It should be noted that he walked from his home in Ryazan near Moscow hundreds of miles to St. Petersburg!

In 1879, he received his degree in natural science, and in 1883, his MD. He then went to study at the University of Leipzig in Germany. In 1890, he was offered a position as professor of physiology at his alma mater, the Military Medical Academy, which is where he spent the rest of his life. It was in 1900 that he began studying reflexes, especially the salivary response.

In 1904, he was awarded the Nobel Prize in physiology for his work on digestion, and in 1921, he received the Hero of the Revolution Award from Lenin himself.

Pavlovian (or classical) conditioning builds on reflexes: We begin with an unconditioned stimulus and an unconditioned response -- a reflex! We then associate a neutral stimulus with the reflex by presenting it with the unconditioned stimulus. Over a number of repetitions, the neutral stimulus by itself will elicit the response! At this point, the neutral stimulus is renamed the conditioned stimulus, and the response is called the conditioned response.

Or, to put it in the form that Pavlov observed in his dogs, some meat powder on the tongue makes a dog salivate. Ring a bell at the same time, and after a few repetitions, the dog will salivate upon hearing the bell alone -- without being given the meat powder!

Pavlov agreed with Sechenov that there was inhibition as well as excitation. When the bell is rung many times with no meat forthcoming, the dog eventually stops salivating at the sound of the bell. That’s extinction. But, just give him a little meat powder once, and it is as if he had never had the behavior extinguished: He is right back to salivating to the bell. This spontaneous recovery strongly suggests that the habit has been there all along. The dog had simply learned to inhibit his response.

Pavlov, of course, could therefore condition not only excitation but inhibition. You can teach a dog that he is NOT getting meat just as easily as you can teach him that he IS getting meat. For example, one bell could mean dinner, and another could mean dinner is over. If the bells, however, were too similar, or were rung simultaneously, many dogs would have something akin to a nervous breakdown, which Pavlov called an experimental neurosis.

In fact, Pavlov classified his dogs into four different personalities, à la the ancient Greeks: Dogs that got angry were choleric, ones that fell asleep were phlegmatic, ones that whined were melancholy, and the few that kept their spirits up were sanguine! The relative strengths of the dogs’ abilities to activate their nervous system and calm it back down (excitation and inhibition) were the explanations. These explanations would be used later by Hans Eysenck to understand the differences between introverts and extraverts!

Two more terms that come from Pavlov are the first and second signal systems. The first signal system is where the conditioned stimulus (a bell) acts as a “signal” that an important event is to occur -- i.e. the unconditioned stimulus (the meat). The second signal system is when arbitrary symbols come to stand for stimuli, as they do in human language.

Edward Lee Thorndike

Over in America, things were happening as well. Edward Lee Thorndike, although technically a functionalist, was setting the stage for an American version of Russian behaviorism. Thorndike (1874-1949) got his bachelor’s degree from Wesleyan University in Connecticut in 1895 and his master’s from Harvard in 1897. While there he took a class from William James and they became fast friends. He received a fellowship at Columbia, and got his PhD in 1898. He stayed to teach at Columbia until he retired in 1940.

He will always be remembered for his cats and his poorly constructed “puzzleboxes.” These boxes had escape mechanisms of various complexities that required that the cats do several behaviors in sequence. From this research, he concluded that there were two laws of learning:

1. The law of exercise, which is basically the same as Aristotle’s law of frequency. The more often an association (or neural connection) is used, the stronger the connection. Naturally, the less it is used, the weaker the connection. These two were referred to as the law of use and disuse respectively.

2. The law of effect. When an association is followed by a “satisfying state of affairs,” the connection is strengthened. And, likewise, when an association is followed by an unsatisfying state of affairs, it is weakened. Except for the mentalistic language (“satisfying” is not behavioral!), it is the same thing as Skinner’s operant conditioning.

In 1929, his research led him to abandon all of the above except what we would now call reinforcement (the first half of law 2).

He is also known for his study of transfer of training. It was believed back then (and is still often believed) that studying difficult subjects -- even if you would never use them -- was good for you because it “strengthened” your mind, sort of like exercise strengthens your muscles. It was used back then to justify making kids learn Latin, just like it is used today to justify making kids learn calculus. He found, however, that it was only the similarity of the second subject to the first that leads to improved learning in the second subject. So Latin may help you learn Italian, or algebra may help you learn calculus, but Latin won’t help you learn calculus, or the other way around.

John Broadus Watson

John Watson was born January 9, 1878 in a small town outside Greenville, South Carolina. He was brought up on a farm by a fundamentalist mother and a carousing father. When John was 12, they moved into the town of Greenville, but a year later his father left the family. John became a troublemaker and barely passed in school.

At 16, he began attending Furman University, also in Greenville, and he graduated at 22 with a master’s degree. He then went on to the University of Chicago to study under John Dewey. He found Dewey “incomprehensible” and switched his interests from philosophy to psychology and neurophysiology. Dirt poor, he worked his way through graduate school by waiting tables, sweeping the psych lab, and feeding the rats.

In 1902 he suffered a “nervous breakdown” which had been a long time coming. He had suffered from an intense fear of the dark since childhood -- due to stories he had heard then about the devil doing his work in the night -- and this worsened into depression.

Nevertheless, after some rest, he finished his PhD the following year, got an assistantship with his professor, the respected functionalist James Angell, and married a student in his intro psych class, Mary Ickes. They would go on to have two children. (The actress Mariette Hartley is his granddaughter.)

The following year, he was made an instructor. He developed a well-run animal lab where he worked with rats, monkeys, and terns. Johns Hopkins offered him a full professorship and a laboratory in 1908, when the previous professor was caught in a brothel.

In 1913, he wrote an article called "Psychology as the Behaviorist Views It" for Psychological Review. Here, he outlined the behaviorist program. The following year, he published the book Behavior: An Introduction to Comparative Psychology. In this book, he pushed the study of rats as a useful model for human behavior. Until then, rat research was not thought of as significant for understanding human beings. And, by 1915, he had absorbed Pavlov and Bekhterev’s work on conditioned reflexes, and incorporated that into his behaviorist package.

In 1917, he was drafted into the army, where he served until 1919. In that year, he came out with the book Psychology from the Standpoint of a Behaviorist -- basically an expansion of his original article.

At this time, he expanded his lab work to include human infants. His best known experiment was conducted in 1920 with the help of his lab assistant Rosalie Rayner. “Little Albert,” a nine-month-old child, was conditioned to fear a white rat by pairing it seven times with a loud noise made by hitting a steel bar with a hammer. His fear quickly generalized to a rabbit, a fur coat, a Santa Claus mask, and even cotton. Albert was never “deconditioned” because his mother and he moved away. It was clear, however, that the conditioning tended to disappear (extinguish) rather quickly, so we assume that Albert was soon over his fear. This suggests that conditioned fear is not really the same as a phobia. Later, another child, three-year-old Peter, was conditioned and then “deconditioned” by gradually pairing his fear of a rabbit with milk and cookies and other positive things.

In that year, his affair with his lab assistant was revealed and his wife sued for divorce. The administration at Johns Hopkins asked him for his resignation. He immediately married Rosalie Rayner and began looking for business opportunities.

He soon found himself working for the J. Walter Thompson advertising agency. He worked in a great variety of positions within the company, and was made vice president in 1924. By all standards of the time, he was very successful and quite rich! He increased sales of such items as Pond’s cold cream, Maxwell House coffee, and Johnson’s baby powder, and is thought to have invented the slogan “LSMFT -- Lucky Strike Means Fine Tobacco.”

He published his book Behaviorism, designed for the average reader, in 1925, and revised it in 1930. This was his final statement of his position.

Psychology according to Watson is essentially the science of stimuli and responses. We begin with reflexes and, by means of conditioning, acquire learned responses. Brain processes are unimportant (he called the brain a “mystery box”). Emotions are bodily responses to stimuli. Thought is subvocal speech. Consciousness is nothing at all.

Most importantly, he denied the existence of any human instincts, inherited capacities or talents, and temperaments. This radical environmentalism is reflected in what is perhaps his best known quote:

Give me a dozen healthy infants, well-formed, and my own specified world to bring them up in and I’ll guarantee to take any one at random and train him to become any type of specialist I might select -- doctor, lawyer, artist, merchant-chief and, yes, even beggar-man and thief, regardless of his talents, penchants, tendencies, abilities, vocations, and race of his ancestors. (In Behaviorism, 1930)

In addition to writing popular articles for McCall’s, Harper’s, Collier’s and other magazines, he published Psychological Care of Infant and Child in 1928. Among other things, he saw parents as more likely than not to ruin their child’s healthy development, and argued particularly against too much hugging and other demonstrations of affection!

In 1936, he was hired as vice-president of another agency, William Esty and Company. He devoted himself to business until he retired ten years later. He died in New York City on September 25, 1958.

William McDougall

William McDougall doesn't belong in this chapter, really. But his dislike for Watson's brand of behaviorism and his efforts against it warrant his inclusion. He was born June 22, 1871 in Lancashire, England. He entered the University of Manchester at 15, and received his medical degree from St. Thomas's Hospital in London, in 1897. He usually referred to himself as an anthropologist, especially after a one-year Cambridge University expedition to visit the tribes of central Borneo.

From 1898, McDougall held lectureships in Cambridge and Oxford. His reputation developed in England with the publication of several texts, including Introduction to Social Psychology in 1908 and Body and Mind in 1911. In 1912, he was made a Fellow of the Royal Society.

During WWI, he served in the medical corps, treating soldiers suffering from "shell shock," what we now call post-traumatic stress syndrome. After the war, he himself received therapy from Carl Jung!

He was offered a position as Professor of Psychology at Harvard in 1920. He considered himself a follower of William James, so he took this as a great honor. In that same year, he published The Group Mind, followed in 1923 by the Outline of Psychology.

In 1924, he participated in The Battle of Behaviorism (published in 1929). This was a debate with John Watson at the Psychology Club meeting in Washington DC that year. The audience narrowly voted McDougall the winner, but it would be Watson who would win the favor of American psychology for years to come!

McDougall resigned from his position as chair of Psychology at Harvard in 1926, and began teaching at Duke University in 1927. It should be noted that he had a particularly strong relationship with his wife, and in 1927 dedicated his book Character and the Conduct of Life to her with these words: "To my wife, to whose intuitive insight I owe whatever understanding of human nature I have acquired." He died in 1938.

McDougall was an hereditarian to the end, promoting a psychology based on instincts. He himself referred to his position as evolutionary psychology. Further, he was the leading critic of the behaviorism of his day. He particularly hated Watson's simplistic materialism.

McDougall was not well liked by his students or by his colleagues. The American press (notably the New York Times) was particularly antagonistic towards him. The reasons were clear: McDougall took his hereditarian position at a time when the environmental position ruled American psychology and popular opinion. He called himself a "democratic elitist" and considered a nation's intellectual aristocracy a treasure which should be protected. Further, he believed in the hereditary nature of group differences, both national and racial, and proposed the institution of eugenic programs. In his defense, however, he had no sympathy with Nazism and its version of eugenics!

McDougall has been largely forgotten -- until recently, with genetics and evolutionary psychology on the rise.

McDougall saw instincts as having three components:

Here is a list of instincts and accompanying emotions:

Clark Hull

Clark Leonard Hull was born May 24, 1884 near Akron, New York, to a poor, rural family. He was educated in a one-room school house and even taught there one year, when he was only 17. While a student, he had a brush with death from typhoid fever.

He went on to Alma College in Michigan to study mining engineering. He had worked for a mining company for two months when he developed polio. This forced him to look for a less strenuous career. For two years, he was principal of the same school he had gone to as a child -- now consisting of two rooms! He read William James and saved up his money to go to the University of Michigan.

After graduating, he taught for a while, then went on to the University of Wisconsin. He got his PhD there in 1918, and stayed to teach until 1929. This was where his ideas on a behavioristic psychology were formed.

In 1929, he became a professor of psychology at Yale. In 1936, he was elected president of the APA. He published his masterwork, Principles of Behavior, in 1943. In 1948, he had a massive heart attack. Nevertheless, he managed to finish a second book, A Behavior System, in that same year. He died of a second heart attack May 10, 1952.

Hull’s theory is characterized by very strict operationalization of variables and a notoriously mathematical presentation. Here are the variables Hull looked at when conditioning rats:

Independent variables:

S, the physical stimulus.

Time of deprivation or the period and intensity of painful stimuli.

G, the size and quality of the reinforcer.

The time delay between the response and the reinforcer.

The time between the conditioned and unconditioned stimulus.

N, the number of trials.

The amount of time the rat had been active.

The intervening variables:

s, the stimulus trace.

V, the stimulus intensity dynamism.

D, the drive or primary motivation or need (dependent on deprivation, etc.).

K, incentive motivation (dependent on the amount or quality of reinforcer).

J, the incentive based on delay of reinforcement.

sHr, habit strength, based on N, G (or K), J, and time between conditioned and unconditioned stimulus.

Ir, reactive inhibition (e.g. exhaustion because the rat had been active for some time).

sIr, conditioned inhibition (due to other training).

sLr, the reaction threshold (minimum reinforcement required for any learning).

sOr, momentary behavioral oscillation -- i.e. random variables not otherwise accounted for.

And the main intervening variable, sEr, excitatory potential, which is the result of all the above...

sEr = V x D x K x J x sHr - sIr - Ir - sOr - sLr.

The dependent variables:

Latency (speed of the response).

Amplitude (the strength of the response).

Resistance to extinction.

Frequency (the probability of the response).

All of which are measures of R, the response, which is a function of sEr.

Whew!

The essence of the theory can be summarized by saying that the response is a function of the strength of the habit times the strength of the drive. It is for this reason that Hull’s theory is often referred to as drive theory.
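As a rough illustration, the formula for sEr given above can be written as a small function. This is only a sketch: the function name, the default values of zero for the subtracted terms, and the example numbers are assumptions made here for illustration, since the text gives no units or typical values for any of the variables.

```python
def excitatory_potential(V, D, K, J, sHr, sIr=0.0, Ir=0.0, sOr=0.0, sLr=0.0):
    """Combine the intervening variables into sEr, following
    sEr = V x D x K x J x sHr - sIr - Ir - sOr - sLr."""
    # Multiplicative part: if drive (D) or habit strength (sHr) is zero,
    # the whole product is zero, whatever the other factors are.
    excitation = V * D * K * J * sHr
    # Subtractive part: conditioned and reactive inhibition, oscillation,
    # and the reaction threshold all reduce the result.
    return excitation - sIr - Ir - sOr - sLr


# Illustrative numbers only (hypothetical, not taken from Hull).
hungry = excitatory_potential(V=1.0, D=2.0, K=1.0, J=1.0, sHr=0.5)
sated = excitatory_potential(V=1.0, D=0.0, K=1.0, J=1.0, sHr=0.5)
tired = excitatory_potential(V=1.0, D=2.0, K=1.0, J=1.0, sHr=0.5, Ir=0.25)
print(hungry, sated, tired)
```

With the other factors held at 1, the result is simply habit strength times drive, which is the abbreviated form of the theory described above. A well-learned habit with no drive behind it produces no excitatory potential at all.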

Hull was the most influential behaviorist of the 1940’s and 50’s. His student, Kenneth W. Spence, maintained that popularity through much of the 1960’s. But the theory, acceptable in its abbreviated form, was too unwieldy in the opinion of other behaviorists, and could not easily generalize from carefully controlled rat experiments to the complexities of human life. It is now a matter of historical interest only.

E. C. Tolman

A very different theory would also have some popularity before behaviorism left the experimental scene to the cognitivists: The cognitive behaviorism of Edward Chase Tolman. E. C. was born April 14, 1886 in Newton, Mass. His father was a businessman, his mother a housewife and fervent Quaker. He and his older brother attended MIT. His brother went on to become a famous physicist.

E. C. was strongly influenced by reading William James, so in 1911 he went to graduate school at Harvard. While there, he spent a summer in Germany studying with Kurt Koffka, the Gestalt psychologist. He received his PhD in 1915.

He went off to teach at Northwestern University. But he was a shy teacher, and an avowed pacifist during World War I, and the University dismissed him in 1918. He went to teach at the University of California at Berkeley. He also served in the OSS (Office of Strategic Services) for two years during World War II.

The University of California required loyalty oaths of the professors there (inspired by Joseph McCarthy and the “red scare”). Tolman led protests and was summarily suspended. The courts found in his favor and he was reinstated. In 1959 he retired, and received an honorary doctorate from the same University of California at Berkeley! Unfortunately, he died the same year, on November 19.

Although he appreciated the behaviorist agenda for making psychology into a true objective science, he felt Watson and others had gone too far.

1. Watson’s behaviorism was the study of “twitches” -- stimulus-response is too molecular a level. We should study whole, meaningful behaviors: the molar level.

2. Watson saw only simple cause and effect in his animals. Tolman saw purposeful, goal-directed behavior.

3. Watson saw his animals as “dumb” mechanisms. Tolman saw them as forming and testing hypotheses based on prior experience.

4. Watson had no use for internal, “mentalistic” processes. Tolman demonstrated that his rats were capable of a variety of cognitive processes.

An animal, in the process of exploring its environment, develops a cognitive map of the environment. The process is called latent learning, which is learning in the absence of rewards or punishments. The animal develops expectancies (hypotheses) which are confirmed or not by further experience. Rewards (and punishments) come into play only as motivators for performance of a learned behavior, not as the causes of learning itself.

He himself acknowledged that his behaviorism was more like Gestalt psychology than like Watson’s brand of behaviorism. From our perspective today, he can be considered one of the precursors of the cognitive movement.

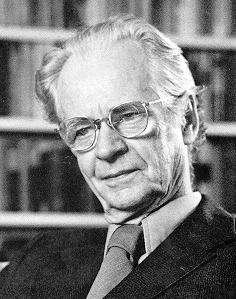

B. F. Skinner

Burrhus Frederic Skinner was born March 20, 1904, in the small Pennsylvania town of Susquehanna. His father was a lawyer, and his mother a strong and intelligent housewife. His upbringing was old-fashioned and hard-working.

Burrhus was an active, out-going boy who loved the outdoors and building things, and actually enjoyed school. His life was not without its tragedies, however. In particular, his brother died at the age of 16 of a cerebral aneurysm.

Burrhus received his BA in English from Hamilton College in upstate New York. He didn’t fit in very well, not enjoying the fraternity parties or the football games. He wrote for the school paper, including articles critical of the school, the faculty, and even Phi Beta Kappa! To top it off, he was an atheist -- in a school that required daily chapel attendance.

He wanted to be a writer and did try, sending off poetry and short stories. When he graduated, he built a study in his parents’ attic to concentrate, but it just wasn’t working for him.

Ultimately, he resigned himself to writing newspaper articles on labor problems, and lived for a while in Greenwich Village in New York City as a “bohemian.” After some traveling, he decided to go back to school, this time at Harvard. He got his master’s in psychology in 1930 and his doctorate in 1931, and stayed there to do research until 1936.

Also in that year, he moved to Minneapolis to teach at the University of Minnesota. There he met and soon married Yvonne Blue. They had two daughters, the second of whom became famous as the first infant to be raised in one of Skinner’s inventions, the air crib. Although it was nothing more than a combination crib and playpen with glass sides and air conditioning, it looked too much like keeping a baby in an aquarium to catch on.

In 1945, he became the chairman of the psychology department at Indiana University. In 1948, he was invited to come to Harvard, where he remained for the rest of his life. He was a very active man, doing research and guiding hundreds of doctoral candidates as well as writing many books. While not successful as a writer of fiction and poetry, he became one of our best psychology writers. His books include Walden Two, a fictional account of a community run by his behaviorist principles.

On August 18, 1990, B. F. Skinner died of leukemia after becoming perhaps the most celebrated psychologist since Sigmund Freud.

Theory

B. F. Skinner’s entire system is based on operant conditioning. The organism is in the process of “operating” on the environment, which in ordinary terms means it is bouncing around its world, doing what it does. During this “operating,” the organism encounters a special kind of stimulus, called a reinforcing stimulus, or simply a reinforcer. This special stimulus has the effect of increasing the operant -- that is, the behavior occurring just before the reinforcer. This is operant conditioning: “the behavior is followed by a consequence, and the nature of the consequence modifies the organism's tendency to repeat the behavior in the future.”

Imagine a rat in a cage. This is a special cage (called, in fact, a “Skinner box”) that has a bar or pedal on one wall that, when pressed, causes a little mechanism to release a food pellet into the cage. The rat is bouncing around the cage, doing whatever it is rats do, when he accidentally presses the bar and -- hey, presto! -- a food pellet falls into the cage! The operant is the behavior just prior to the reinforcer, which is the food pellet, of course. In no time at all, the rat is furiously pedaling away at the bar, hoarding his pile of pellets in the corner of the cage.

A behavior followed by a reinforcing stimulus results in an increased probability of that behavior occurring in the future.

What if you don’t give the rat any more pellets? Apparently, he’s no fool, and after a few futile attempts, he stops his bar-pressing behavior. This is called extinction of the operant behavior.

A behavior no longer followed by the reinforcing stimulus results in a decreased probability of that behavior occurring in the future.

Now, if you were to turn the pellet machine back on, so that pressing the bar again provides the rat with pellets, the behavior of bar-pushing will “pop” right back into existence, much more quickly than it took for the rat to learn the behavior the first time. This is because the return of the reinforcer takes place in the context of a reinforcement history that goes all the way back to the very first time the rat was reinforced for pushing on the bar!
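The two rules above (reinforcement raises the probability of a behavior, extinction lowers it) can be sketched as a toy model. The linear update rule, the learning rate of 0.2, and the starting probability of 0.05 are all assumptions chosen for illustration. They are not Skinner's own formulation, and this simple model does not capture the faster relearning just described.

```python
def update(p, reinforced, rate=0.2):
    """Nudge the probability of bar-pressing after one press.

    A reinforced press moves p toward 1; an unreinforced press moves it
    toward 0. The linear form and the rate are illustrative assumptions.
    """
    target = 1.0 if reinforced else 0.0
    return p + rate * (target - p)


def run_phase(p, reinforced, presses):
    """Apply the same outcome (pellet or no pellet) to a series of presses."""
    for _ in range(presses):
        p = update(p, reinforced)
    return p


baseline = 0.05  # the rat occasionally bumps the bar by accident
trained = run_phase(baseline, reinforced=True, presses=20)
extinguished = run_phase(trained, reinforced=False, presses=20)
print(round(baseline, 3), round(trained, 3), round(extinguished, 3))
```

Twenty reinforced presses push the probability close to 1, and twenty unreinforced presses bring it back down near 0, mirroring the conditioning and extinction phases in the Skinner box.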

Schedules of reinforcement

Skinner likes to tell about how he "accidentally" -- i.e. operantly -- came across his various discoveries. For example, he talks about running low on food pellets in the middle of a study. Now, these were the days before “Purina rat chow” and the like, so Skinner had to make his own rat pellets, a slow and tedious task. So he decided to reduce the number of reinforcements he gave his rats for whatever behavior he was trying to condition, and, lo and behold, the rats kept up their operant behaviors, and at a stable rate, no less. This is how Skinner discovered schedules of reinforcement!

Continuous reinforcement is the original scenario: Every time that the rat does the behavior (such as pedal-pushing), he gets a rat goodie.

The fixed ratio schedule was the first one Skinner discovered: If the rat presses the pedal three times, say, he gets a goodie. Or five times. Or twenty times. Or “x” times. There is a fixed ratio between behaviors and reinforcers: 3 to 1, 5 to 1, 20 to 1, etc. This is a little like “piece rate” in the clothing manufacturing industry: You get paid so much for so many shirts.

The fixed interval schedule uses a timing device of some sort. If the rat presses the bar at least once during a particular stretch of time (say 20 seconds), then he gets a goodie. If he fails to do so, he doesn’t get a goodie. But even if he hits that bar a hundred times during that 20 seconds, he still only gets one goodie! One strange thing that happens is that the rats tend to “pace” themselves: They slow down the rate of their behavior right after the reinforcer, and speed up when the time for it gets close.

Skinner also looked at variable schedules. Variable ratio means you change the “x” each time -- first it takes 3 presses to get a goodie, then 10, then 1, then 7 and so on. Variable interval means you keep changing the time period -- first 20 seconds, then 5, then 35, then 10 and so on.

In both cases, it keeps the rats on their rat toes. With the variable interval schedule, they no longer “pace” themselves, because they can no longer establish a “rhythm” between behavior and reward. Most importantly, these schedules are very resistant to extinction. It makes sense, if you think about it. If you haven’t gotten a reinforcer for a while, well, it could just be that you are at a particularly “bad” ratio or interval! Just one more bar press, maybe this’ll be the one!

This, according to Skinner, is the mechanism of gambling. You may not win very often, but you never know whether and when you’ll win again. It could be the very next time, and if you don’t roll them dice, or play that hand, or bet on that number this once, you’ll miss out on the score of the century!
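To make the schedules concrete, here is a minimal sketch that counts how many goodies a rat would earn under three of them. The function names and the way presses are represented (a simple count, or a list of press times in seconds) are assumptions made for this illustration. The fixed interval version follows the description above: one goodie per stretch of time, provided the rat presses at least once during it.

```python
def fixed_ratio(presses, ratio):
    """Goodies earned when every `ratio`-th press pays off."""
    return presses // ratio


def variable_ratio(presses, requirements):
    """Goodies earned when the required number of presses changes each time.

    `requirements` is cycled through in order, e.g. [3, 10, 1, 7].
    """
    if min(requirements) < 1:
        raise ValueError("each requirement must be at least one press")
    earned = 0
    i = 0
    while presses >= requirements[i % len(requirements)]:
        presses -= requirements[i % len(requirements)]
        earned += 1
        i += 1
    return earned


def fixed_interval(press_times, interval):
    """Goodies earned when each stretch of `interval` seconds pays at most
    once, as long as it contains at least one press."""
    return len({int(t // interval) for t in press_times})


print(fixed_ratio(20, 5))                       # 20 presses at 5 to 1
print(variable_ratio(21, [3, 10, 1, 7]))        # 3 + 10 + 1 + 7 = 21 presses
print(fixed_interval([i * 0.1 for i in range(100)], 20))  # 100 presses in 10 s
print(fixed_interval([1, 2, 25, 61], 20))       # presses in three stretches
```

The third call shows the point made above about fixed intervals: a hundred presses inside a single 20-second stretch still earn only one goodie. A variable interval schedule could be sketched the same way by letting the length of each stretch change.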

Shaping

A question Skinner had to deal with was how we get to more complex sorts of behaviors. He responded with the idea of shaping, or “the method of successive approximations.” Basically, it involves first reinforcing a behavior only vaguely similar to the one desired. Once that is established, you look out for variations that come a little closer to what you want, and so on, until you have the animal performing a behavior that would never show up in ordinary life. Skinner and his students have been quite successful in teaching simple animals to do some quite extraordinary things. My favorite is teaching pigeons to bowl!

I used shaping on one of my daughters once. She was about three or four years old, and was afraid to go down a particular slide. So I picked her up, put her at the end of the slide, asked if she was okay and if she could jump down. She did, of course, and I showered her with praise. I then picked her up and put her a foot or so up the slide, asked her if she was okay, and asked her to slide down and jump off. So far so good. I repeated this again and again, each time moving her a little up the slide, and backing off if she got nervous. Eventually, I could put her at the top of the slide and she could slide all the way down and jump off. Unfortunately, she still couldn’t climb up the ladder, so I was a very busy father for a while.

Beyond these fairly simple examples, shaping also accounts for the most complex of behaviors. You don’t, for example, become a brain surgeon by stumbling into an operating theater, cutting open someone's head, successfully removing a tumor, and being rewarded with prestige and a hefty paycheck, along the lines of the rat in the Skinner box. Instead, you are gently shaped by your environment to enjoy certain things, do well in school, take a certain bio class, see a doctor movie perhaps, have a good hospital visit, enter med school, be encouraged to drift towards brain surgery as a speciality, and so on. This could be something your parents were carefully doing to you, à la a rat in a cage. But much more likely, this is something that was more or less unintentional.

Aversive stimuli

An aversive stimulus is the opposite of a reinforcing stimulus, something we might find unpleasant or painful.

A behavior followed by an aversive stimulus results in a decreased probability of the behavior occurring in the future.

This both defines an aversive stimulus and describes the form of conditioning known as punishment. If you shock a rat for doing x, it’ll do a lot less of x. If you spank Johnny for throwing his toys, he will throw his toys less and less (maybe).

On the other hand, if you remove an already active aversive stimulus after a rat or Johnny performs a certain behavior, you are doing negative reinforcement. If you turn off the electricity when the rat stands on his hind legs, he’ll do a lot more standing. If you stop your perpetual nagging when I finally take out the garbage, I’ll be more likely to take out the garbage (perhaps). You could say it “feels so good” when the aversive stimulus stops, that this serves as a reinforcer!

Behavior followed by the removal of an aversive stimulus results in an increased probability of that behavior occurring in the future.

Notice how difficult it can be to distinguish some forms of negative reinforcement from positive reinforcement: If I starve you, is the food I give you when you do what I want a positive -- i.e. a reinforcer? Or is it the removal of a negative -- i.e. the aversive stimulus of hunger?

Skinner (contrary to some stereotypes that have arisen about behaviorists) doesn’t “approve” of the use of aversive stimuli -- not because of ethics, but because they don’t work well! Notice that I said earlier that Johnny will maybe stop throwing his toys, and that I perhaps will take out the garbage? That’s because whatever was reinforcing the bad behaviors hasn’t been removed, as it would’ve been in the case of extinction. This hidden reinforcer has just been “covered up” with a conflicting aversive stimulus. So, sure, sometimes the child (or me) will behave -- but it still feels good to throw those toys. All Johnny needs to do is wait till you’re out of the room, or find a way to blame it on his brother, or in some way escape the consequences, and he’s back to his old ways. In fact, because Johnny now only gets to enjoy his reinforcer occasionally, he’s gone into a variable schedule of reinforcement, and he’ll be even more resistant to extinction than ever!

Behavior modification

Behavior modification -- often referred to as b-mod -- is the therapy technique based on Skinner’s work. It is very straightforward: Extinguish an undesirable behavior (by removing the reinforcer) and replace it with a desirable behavior by reinforcement. It has been used on all sorts of psychological problems -- addictions, neuroses, shyness, autism, even schizophrenia -- and works particularly well with children. There are examples of back-ward psychotics who haven’t communicated with others for years who have been conditioned to behave themselves in fairly normal ways, such as eating with a knife and fork, taking care of their own hygiene needs, dressing themselves, and so on.

There is an offshoot of b-mod called the token economy. This is used primarily in institutions such as psychiatric hospitals, juvenile halls, and prisons. Certain rules are made explicit in the institution, and behaving yourself appropriately is rewarded with tokens -- poker chips, tickets, funny money, recorded notes, etc. Certain poor behavior is also often followed by a withdrawal of these tokens. The tokens can be traded in for desirable things such as candy, cigarettes, games, movies, time out of the institution, and so on. This has been found to be very effective in maintaining order in these often difficult institutions.
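The bookkeeping behind a token economy is simple enough to sketch in a few lines. The class name, the example behaviors, the prices, and the rule that a balance never drops below zero are all hypothetical choices made for this illustration, not details of any real program.

```python
class TokenEconomy:
    """Toy ledger: behaviors earn or cost tokens; tokens buy privileges."""

    def __init__(self, earn, lose, prices):
        self.earn = earn        # behavior -> tokens awarded
        self.lose = lose        # behavior -> tokens withdrawn
        self.prices = prices    # privilege -> token cost
        self.balance = {}       # person -> tokens currently held

    def record(self, person, behavior):
        """Apply the rule for one observed behavior; return the new balance."""
        change = self.earn.get(behavior, 0) - self.lose.get(behavior, 0)
        # Balances never go below zero in this sketch (an assumption).
        self.balance[person] = max(0, self.balance.get(person, 0) + change)
        return self.balance[person]

    def redeem(self, person, privilege):
        """Trade tokens for a privilege; return True if the person can pay."""
        cost = self.prices[privilege]
        if self.balance.get(person, 0) < cost:
            return False
        self.balance[person] -= cost
        return True


econ = TokenEconomy(
    earn={"made bed": 2, "ate politely": 1},
    lose={"started fight": 3},
    prices={"movie": 3},
)
econ.record("Pat", "made bed")
econ.record("Pat", "ate politely")
print(econ.redeem("Pat", "movie"))   # Pat has exactly enough tokens
```

The key design point is that the rules are explicit and applied the same way every time, which is what makes the tokens work as reinforcers inside the institution.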

There is a drawback to token economy: When an “inmate” of one of these institutions leaves, they return to an environment that reinforces the kinds of behaviors that got them into the institution in the first place. The psychotic’s family may be thoroughly dysfunctional. The juvenile offender may go right back to “the ’hood.” No one is giving them tokens for eating politely. The only reinforcements may be attention for “acting out,” or some gang glory for robbing a Seven-Eleven. In other words, the environment doesn’t travel well!

Walden Two

Skinner started his career as an English major, writing poems and short stories. He has, of course, written a large number of papers and books on behaviorism. But he will probably be most remembered by the general run of readers for his book Walden Two, wherein he describes a utopia-like commune run on his operant principles.

People, especially the religious right, came down hard on his book. They said that his ideas take away our freedom and dignity as human beings. He responded to the sea of criticism with another book (one of his best) called Beyond Freedom and Dignity. He asked: What do we mean when we say we want to be free? Usually we mean we don’t want to be in a society that punishes us for doing what we want to do. Okay -- aversive stimuli don’t work well anyway, so out with them! Instead, we’ll only use reinforcers to “control” society. And if we pick the right reinforcers, we will feel free, because we will be doing what we feel we want!

Likewise for dignity. When we say “she died with dignity,” what do we mean? We mean she kept up her “good” behaviors without any apparent ulterior motives. In fact, she kept her dignity because her reinforcement history has led her to see behaving in that “dignified” manner as more reinforcing than making a scene.

The bad do bad because the bad is rewarded. The good do good because the good is rewarded. There is no true freedom or dignity. Right now, our reinforcers for good and bad behavior are chaotic and out of our control -- it’s a matter of having good or bad luck with your “choice” of parents, teachers, peers, and other influences. Let’s instead take control, as a society, and design our culture in such a way that good gets rewarded and bad gets extinguished! With the right behavioral technology, we can design culture.

Both freedom and dignity are examples of what Skinner calls mentalistic constructs -- unobservable and so useless for a scientific psychology. Other examples include defense mechanisms, the unconscious, archetypes, fictional finalisms, coping strategies, self-actualization, consciousness, even things like hunger and thirst. The most important example is what he refers to as the homunculus -- Latin for “the little man” -- that supposedly resides inside us and is used to explain our behavior, ideas like soul, mind, ego, will, self, and, of course, personality.

Instead, Skinner recommends that psychologists concentrate on observables, that is, the environment and our behavior in it.

Skinner was to enjoy considerable popularity during the 1960s and even into the ’70s. But both the humanistic movement in the clinical world and the cognitive movement in the experimental world were quickly moving in on his beloved behaviorism. Before his death, he publicly lamented the fact that the world had failed to learn from him.

Copyright 1998, 2000, C. George Boeree